How to use Fluentd Docker

Sending logs with Fluentd using Docker

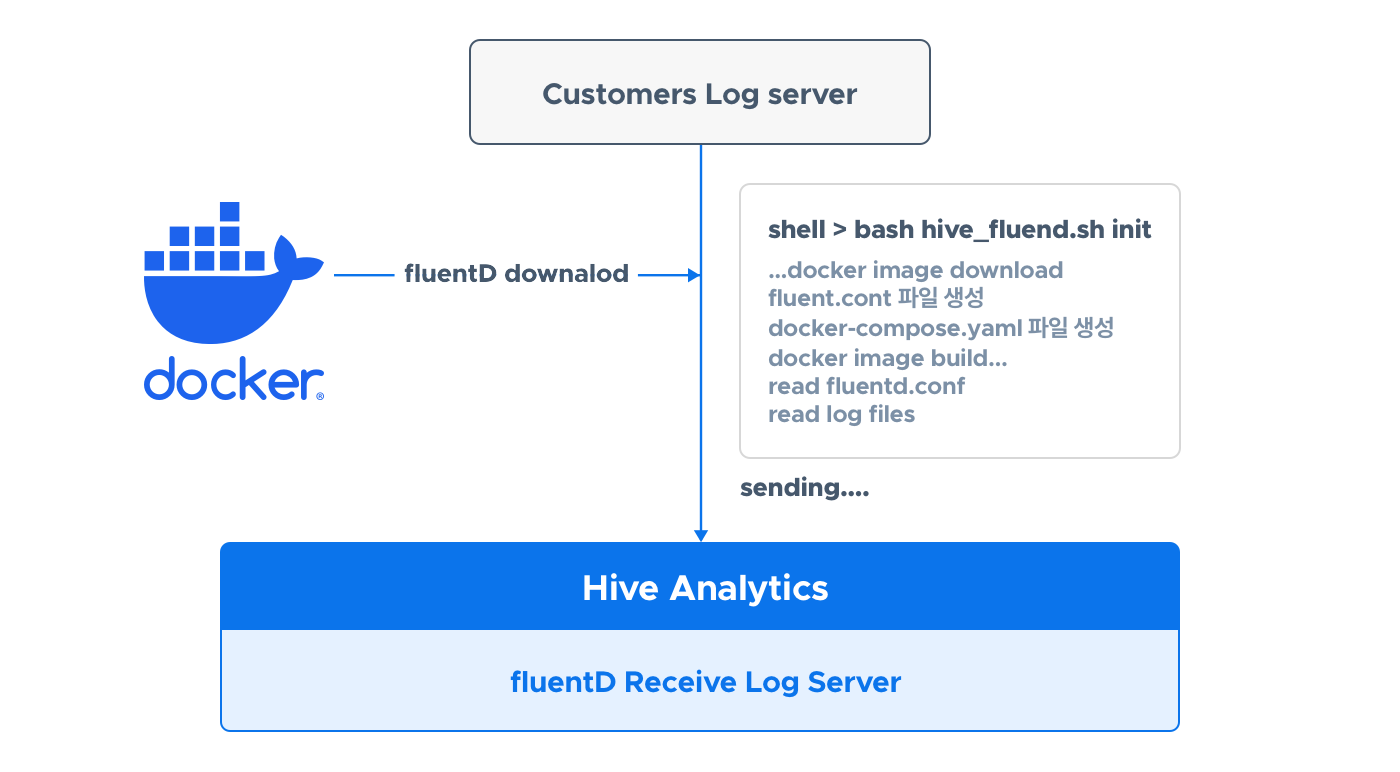

You can send logs to the Analytics server using Docker, without installing Fluentd. When you run hive_fluentd.sh, it automatically builds a Docker image and runs it as a container.

Before you begin

Prepare an environment where you can use Docker and download the automation script.

Setting up the Docker environment

To use Docker, you need to install Docker Engine and docker-compose. For details, see here.
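Before running the script, it can help to confirm that both tools are on the PATH. The following is a minimal sketch; the helper name `require_cmd` is hypothetical and not part of the Hive package.

```shell
#!/bin/sh
# Hypothetical helper: succeeds if the given command is found on the PATH.
require_cmd() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "found: $1"
    else
        echo "missing: $1" >&2
        return 1
    fi
}

# Report each prerequisite without aborting on the first missing one.
for tool in docker docker-compose; do
    require_cmd "$tool" || echo "install $tool before running hive_fluentd.sh"
done
```

On installations where Compose is provided as a Docker CLI plugin rather than a standalone binary, `docker compose version` is the equivalent check.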

Downloading the automation script

Download and extract the file.

wget https://developers.withhive.com/wp-content/uploads/2024/08/hive_fluentd_docker.tar # Download the file

tar -xvf hive_fluentd_docker.tar # Extract the file

After extraction, the hive_fluentd_docker folder contains the configuration file hive.conf and the script file hive_fluentd.sh.

Configuring hive.conf

hive.conf supports two setups: one for using language-specific libraries (Java, Python, etc.) and one for sending specific log files.

Config for using language-specific libraries

Set only the build environment (sandbox or live). See the example below.

### Hive.conf ###

# Only use either sandbox or live for the build environment. logs are sent to the Hive server corresponding to each environment.

# Do not modify any other text

build:sandbox

### Don't delete this line ####

When sending logs through the library, Docker transmits logs to the log server through port 24224.

Config for sending specific log files

Enter the file path (absolute path) and tag. If there are multiple log folders, add a path and tag pair for each folder.

The following is an example for when there is one log folder.

### hive.conf ###

build:sandbox # Only use either sandbox or live for the build environment. logs are sent to the Hive server corresponding to each environment.

# Path: folder path where the file to be sent is located (absolute path).

# Text lines added to all files in that path after fluentd is run are sent.

path:/home/user1/docker/shell_test/game

# Tag: tag name to be applied to the file, ha2union.game.log category name

tag:ha2union.game.log.test

### Don't delete this line ####

The following is an example for when there are multiple log folders.

### hive.conf ###

build:sandbox # Only use either sandbox or live for the build environment. logs are sent to the Hive server corresponding to each environment.

# Path: folder path where the file to be sent is located (absolute path).

# Text lines added to all files in that path after fluentd is run are sent.

path:/home/user1/docker/shell_test/game

# Tag: tag name to be applied to the file, ha2union.game.log category name

tag:ha2union.game.log.test

# To add multiple file paths, follow the method below.

# Path and tag must be added together; otherwise, an error will occur if either is empty.

path:/home/user1/docker/shell_test/game2

tag:ha2union.game.log.test2

path:/home/user1/docker/shell_test/game3

tag:ha2union.game.log.test3

### Don't delete this line ####

Do not modify the text at the top and bottom of the config file.
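Because a missing tag or a mistyped build value only surfaces when the script runs, a small pre-flight check can catch it earlier. The sketch below is an assumption-based helper, not part of the Hive package: the function name `validate_hive_conf` is hypothetical, and it checks only the rules stated above (build is sandbox or live, paths are absolute, and every path has a tag).

```shell
#!/bin/sh
# Hypothetical pre-flight check for hive.conf; not part of the Hive package.
validate_hive_conf() {
    conf=$1
    [ -r "$conf" ] || { echo "cannot read $conf" >&2; return 1; }

    # build must be "sandbox" or "live" (a trailing comment is allowed).
    build=$(sed -n 's/^build:\([A-Za-z]*\).*/\1/p' "$conf" | head -n 1)
    case $build in
        sandbox|live) ;;
        *) echo "build must be sandbox or live" >&2; return 1 ;;
    esac

    # Every path needs a matching tag, so the line counts must be equal.
    paths=$(grep -c '^path:' "$conf" || true)
    tags=$(grep -c '^tag:' "$conf" || true)
    if [ "$paths" -ne "$tags" ]; then
        echo "found $paths path line(s) but $tags tag line(s)" >&2
        return 1
    fi

    # Path values must be absolute, i.e. start with "/".
    if grep '^path:' "$conf" | grep -qv '^path:/'; then
        echo "path values must be absolute" >&2
        return 1
    fi

    echo "ok: build=$build, $paths log folder(s)"
}
```

For example, `validate_hive_conf hive_fluentd_docker/hive.conf` prints a one-line summary on success and returns a non-zero status otherwise.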

build

This refers to the type of Analytics server to which logs will be sent. It can be either sandbox or live, indicating either the Analytics sandbox server or the Analytics live server.

path

This is the absolute path of the folder that contains the log files to send. Log files must be in JSON format. Once the hive_fluentd.sh script has started the Docker container, lines appended to any file in this path from that point on are sent to the server. Existing content is not sent: even if the folder already holds log files, only lines added after the container starts are read and sent. The read position is recorded, so if the container is restarted, sending resumes from the line following the last one sent.
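Since only newly appended lines are picked up, a quick way to exercise a configured folder is to append one JSON record to a file in it. This is a minimal sketch: the helper name `append_log_line`, the file name `app.log`, and the JSON field names are hypothetical, and the fields the Analytics server actually expects are not specified here.

```shell
#!/bin/sh
# Hypothetical helper: append one JSON record as a single line to a log file.
append_log_line() {
    log_file=$1
    json=$2
    # printf with %s writes the record verbatim and ends it with one newline,
    # so each record stays on its own line.
    printf '%s\n' "$json" >> "$log_file"
}
```

For example, with the folder from the sample config: `append_log_line /home/user1/docker/shell_test/game/app.log '{"message":"hello"}'`.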

tag

Enter the tag to be applied to the logs in the path. The tag must follow the format ha2union.game.name_to_be_created.
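The format rule can be checked mechanically before editing hive.conf. The sketch below uses a hypothetical helper name, `is_valid_tag`, and tests only the prefix and the presence of a name after it; any further naming restrictions are not covered.

```shell
#!/bin/sh
# Hypothetical helper: succeeds if the tag starts with "ha2union.game."
# and has at least one character after that prefix.
is_valid_tag() {
    case $1 in
        ha2union.game.?*) return 0 ;;
        *) return 1 ;;
    esac
}
```

For example, `is_valid_tag ha2union.game.log.test && echo valid` prints `valid`, while a tag such as `game.log.test` is rejected.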

Running and checking the automation script

After completing the configuration, run hive_fluentd.sh.

Running the script automatically performs every step, from building the Docker image to starting the container and sending logs. If an error occurs, check that hive.conf is set correctly. Output like the following indicates success. You can ignore the pull access denied warning.

[+] Running 1/1

! fluentd Warning pull access denied for com2usplatform/hive_analytics_fluentd_docker, repository does not exist or may require 'docker login': deni... 2.8s

[+] Building 2.1s (8/8) FINISHED docker:default

=> [fluentd internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 145B 0.0s

=> [fluentd internal] load metadata for docker.io/fluent/fluentd:v1.11.4-debian-1.0 1.9s

=> [fluentd auth] fluent/fluentd:pull token for registry-1.docker.io 0.0s

=> [fluentd internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [fluentd 1/2] FROM docker.io/fluent/fluentd:v1.11.4-debian-1.0@sha256:b70acf966c6117751411bb638bdaa5365cb756ad13888cc2ccc0ba479f13aee7 0.0s

=> CACHED [fluentd 2/2] RUN ["gem", "install", "fluent-plugin-forest"] 0.0s

=> [fluentd] exporting to image 0.0s

=> => exporting layers 0.0s

=> => writing image sha256:857dc72492380aeb31bbee36b701f13ae5ae1a933b46945662657246b28964a5 0.0s

=> => naming to docker.io/com2usplatform/hive_analytics_fluentd_docker:sandbox 0.0s

=> [fluentd] resolving provenance for metadata file 0.0s

[+] Running 2/2

✔ Network shell_test_default Created 0.1s

✔ Container hive_analytics_fluentd_docker_sandbox Started 0.5s

Once the execution is completed successfully, it starts sending logs.
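To confirm afterwards that the container is still up, the standard Docker CLI can list it by name. This is a sketch rather than part of the Hive script: the function name `container_status` is hypothetical, and the default container name is taken from the sandbox output above (a live build presumably uses a different name).

```shell
#!/bin/sh
# Hypothetical helper: print the name and status of the Fluentd container.
container_status() {
    name=${1:-hive_analytics_fluentd_docker_sandbox}
    # --filter narrows the list to matching names; --format prints one
    # "name: status" line per running container.
    docker ps --filter "name=$name" --format '{{.Names}}: {{.Status}}'
}
```

Calling `container_status` with no arguments checks the sandbox container. An empty result means no running container matched the name.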

Note

This section covers commands, how to check logs, the file structure, and how to check forest logs.

Commands

Below is a collection of commands.

Restart

Regenerates the fluentd.conf and docker-compose files from the hive.conf file and restarts the container. If the previously created folders and pos files still exist, sending resumes from the point after the last log sent.

Pause

Pause the Docker container.

Resume the paused container

Resume the paused Docker container.

Delete Docker container

Delete the container.
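As a rough equivalent using the standard Docker CLI directly, the pause, resume, and delete operations can be sketched as below. These are not the script's own commands: the function names are hypothetical, and the container name is assumed from the sandbox startup output. There is no plain Docker counterpart for Restart here, because that operation also regenerates the configuration files from hive.conf.

```shell
#!/bin/sh
# Assumed container name, taken from the sandbox startup output.
CONTAINER=${CONTAINER:-hive_analytics_fluentd_docker_sandbox}

# Suspend all processes in the container.
pause_container() { docker pause "$CONTAINER"; }

# Resume a container that was paused.
resume_container() { docker unpause "$CONTAINER"; }

# Remove the container; -f kills it first if it is still running.
remove_container() { docker rm -f "$CONTAINER"; }
```

Removing the container this way leaves the folders on the host untouched, since only the container itself is deleted.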

How to check logs

Check the logs using the command below.
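One way to view the container output with the standard Docker CLI is sketched here. It is an assumption-based alternative, not necessarily the script's own log command: the function name `show_logs` is hypothetical and the default container name is taken from the sandbox startup output.

```shell
#!/bin/sh
# Hypothetical helper: show recent container output and keep following it.
show_logs() {
    name=${1:-hive_analytics_fluentd_docker_sandbox}
    lines=${2:-100}
    # --tail limits the initial output; -f follows new entries until Ctrl+C.
    docker logs --tail "$lines" -f "$name"
}
```

Output from `docker logs` does not include the container-name prefix at the start of each line.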

An example of the log when the command is executed normally is as follows:

hive_analytics_fluentd_docker_sandbox | 2024-08-19 07:19:31 +0000 [info]: parsing config file is succeeded path="/fluentd/etc/fluent.conf"

hive_analytics_fluentd_docker_sandbox | 2024-08-19 07:19:31 +0000 [info]: gem 'fluent-plugin-forest' version '0.3.3'

hive_analytics_fluentd_docker_sandbox | 2024-08-19 07:19:31 +0000 [info]: gem 'fluentd' version '1.11.4'

hive_analytics_fluentd_docker_sandbox | 2024-08-19 07:19:31 +0000 [info]: adding forwarding server 'sandbox-analytics-hivelog' host="sandbox-analytics-hivelog.withhive.com" port=24224 weight=60 plugin_id="object:3fcfa2188060"

hive_analytics_fluentd_docker_sandbox | 2024-08-19 07:19:31 +0000 [info]: using configuration file:

hive_analytics_fluentd_docker_sandbox |

hive_analytics_fluentd_docker_sandbox | log_level info

hive_analytics_fluentd_docker_sandbox |

hive_analytics_fluentd_docker_sandbox |

hive_analytics_fluentd_docker_sandbox | @type forward

hive_analytics_fluentd_docker_sandbox | skip_invalid_event true

hive_analytics_fluentd_docker_sandbox | chunk_size_limit 10m

hive_analytics_fluentd_docker_sandbox | chunk_size_warn_limit 9m

hive_analytics_fluentd_docker_sandbox | port 24224

hive_analytics_fluentd_docker_sandbox | bind "0.0.0.0"

hive_analytics_fluentd_docker_sandbox | source_address_key "fluentd_sender_ip"

hive_analytics_fluentd_docker_sandbox |

hive_analytics_fluentd_docker_sandbox |

hive_analytics_fluentd_docker_sandbox | @type copy

hive_analytics_fluentd_docker_sandbox |

hive_analytics_fluentd_docker_sandbox | @type "forest"

hive_analytics_fluentd_docker_sandbox | subtype "file"

hive_analytics_fluentd_docker_sandbox |

hive_analytics_fluentd_docker_sandbox | time_slice_format %Y%m%d%H

hive_analytics_fluentd_docker_sandbox | time_slice_wait 10s

hive_analytics_fluentd_docker_sandbox | path /fluentd/forest/${tag}/${tag}

hive_analytics_fluentd_docker_sandbox | compress gz

hive_analytics_fluentd_docker_sandbox | format json

hive_analytics_fluentd_docker_sandbox |

hive_analytics_fluentd_docker_sandbox |

hive_analytics_fluentd_docker_sandbox |

hive_analytics_fluentd_docker_sandbox | @type "forward"

hive_analytics_fluentd_docker_sandbox |

hive_analytics_fluentd_docker_sandbox | @type "file"

hive_analytics_fluentd_docker_sandbox | path "/fluentd/buffer/foward_buffer/"

hive_analytics_fluentd_docker_sandbox | chunk_limit_size 10m

hive_analytics_fluentd_docker_sandbox | flush_interval 3s

hive_analytics_fluentd_docker_sandbox | total_limit_size 16m

hive_analytics_fluentd_docker_sandbox | flush_thread_count 16

hive_analytics_fluentd_docker_sandbox | queued_chunks_limit_size 16

hive_analytics_fluentd_docker_sandbox |

hive_analytics_fluentd_docker_sandbox |

hive_analytics_fluentd_docker_sandbox | name "sandbox-analytics-hivelog"

hive_analytics_fluentd_docker_sandbox | host "sandbox-analytics-hivelog.withhive.com"

hive_analytics_fluentd_docker_sandbox | port 24224

hive_analytics_fluentd_docker_sandbox |

hive_analytics_fluentd_docker_sandbox |

hive_analytics_fluentd_docker_sandbox | @type "secondary_file"

hive_analytics_fluentd_docker_sandbox | directory "/fluentd/failed/log/forward-failed/send-failed-file"

hive_analytics_fluentd_docker_sandbox | basename "dump.${tag}.${chunk_id}"

hive_analytics_fluentd_docker_sandbox |

hive_analytics_fluentd_docker_sandbox |

hive_analytics_fluentd_docker_sandbox |

hive_analytics_fluentd_docker_sandbox |

hive_analytics_fluentd_docker_sandbox | 2024-08-19 07:19:31 +0000 [info]: starting fluentd-1.11.4 pid=7 ruby="2.6.6"

hive_analytics_fluentd_docker_sandbox | 2024-08-19 07:19:31 +0000 [info]: spawn command to main: cmdline=["/usr/local/bin/ruby", "-Eascii-8bit:ascii-8bit", "/usr/local/bundle/bin/fluentd", "-c", "/fluentd/etc/fluent.conf", "-p", "/fluentd/plugins", "--under-supervisor"]

hive_analytics_fluentd_docker_sandbox | 2024-08-19 07:19:31 +0000 [info]: adding match pattern="ha2union.**" type="copy"

hive_analytics_fluentd_docker_sandbox | 2024-08-19 07:19:31 +0000 [info]: #0 adding forwarding server 'sandbox-analytics-hivelog' host="sandbox-analytics-hivelog.withhive.com" port=24224 weight=60 plugin_id="object:3f8185f73f64"

hive_analytics_fluentd_docker_sandbox | 2024-08-19 07:19:31 +0000 [info]: adding source type="forward"

hive_analytics_fluentd_docker_sandbox | 2024-08-19 07:19:31 +0000 [info]: #0 starting fluentd worker pid=17 ppid=7 worker=0

hive_analytics_fluentd_docker_sandbox | 2024-08-19 07:19:31 +0000 [info]: #0 listening port port=24224 bind="0.0.0.0"

hive_analytics_fluentd_docker_sandbox | 2024-08-19 07:19:31 +0000 [info]: #0 fluentd worker is now running worker=0

File structure

When the script is run for the first time, it automatically creates the necessary configuration files and folders as shown below. They are retained even if you stop or delete the Docker container, unless you delete them manually.

---- hive_fluentd_docker # The folder created when the downloaded file is decompressed

├-- hive.conf # Environment configuration file

├-- hive_fluentd.sh # Automation script

├-- docker-compose.yaml # Configuration file for docker image creation and volume mount

├-- buffer

│ ├-- foward_buffer # Folder where buffer files are temporarily stored

│ │ ├-- {tag1} # Temporarily stores files to be sent in folders created based on tag names as buffer files

│ │ ├-- {tag2}.....

│ ├-- pos # Folder where files that remember the location of read files are stored (data loss or duplicate transmission may occur if this folder is deleted, tampered with, etc.)

│ │ ├-- {tag1} # Stores pos files in folders created based on tag names.

│ │ ├-- {tag2}.....

├-- conf

│ └-- fluentd.conf # Fluentd configuration file

├-- failed # Stores logs that failed to be sent as files

└-- forest # Stores and compresses the successfully sent files on an hourly basis.

Checking forest logs

When log files are sent, a folder named after each tag is created in the forest folder. Logs accumulate in a temporary file for a certain period and are then saved as a gzip file. To confirm that logs are being sent correctly, check that files are being created in the folder and that their size is increasing.

drwxr-xr-x 2 root root 4096 Aug 23 13:00 ha2union.game.sample.login/

-rw-r--r-- 1 root root 4515 Aug 23 13:00 ha2union.game.sample.login.2024082303_0.log.gz

-rw-r--r-- 1 root root 4515 Aug 23 12:00 ha2union.game.sample.login.2024082302_0.log.gz

Use the following command to check the logs of compressed files.
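A compressed file can be read without unpacking it to disk by streaming it through gzip. The following is a minimal sketch using standard tools; the function name `show_gz_log` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print the first lines of a gzip-compressed log file.
show_gz_log() {
    gz_file=$1
    lines=${2:-10}
    # gzip -dc decompresses to standard output and leaves the file unchanged.
    gzip -dc "$gz_file" | head -n "$lines"
}
```

For example, `show_gz_log ha2union.game.sample.login.2024082303_0.log.gz 5` prints the first five records of that hourly file.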