How to use Fluentd Docker

Sending logs through Fluentd with Docker¶

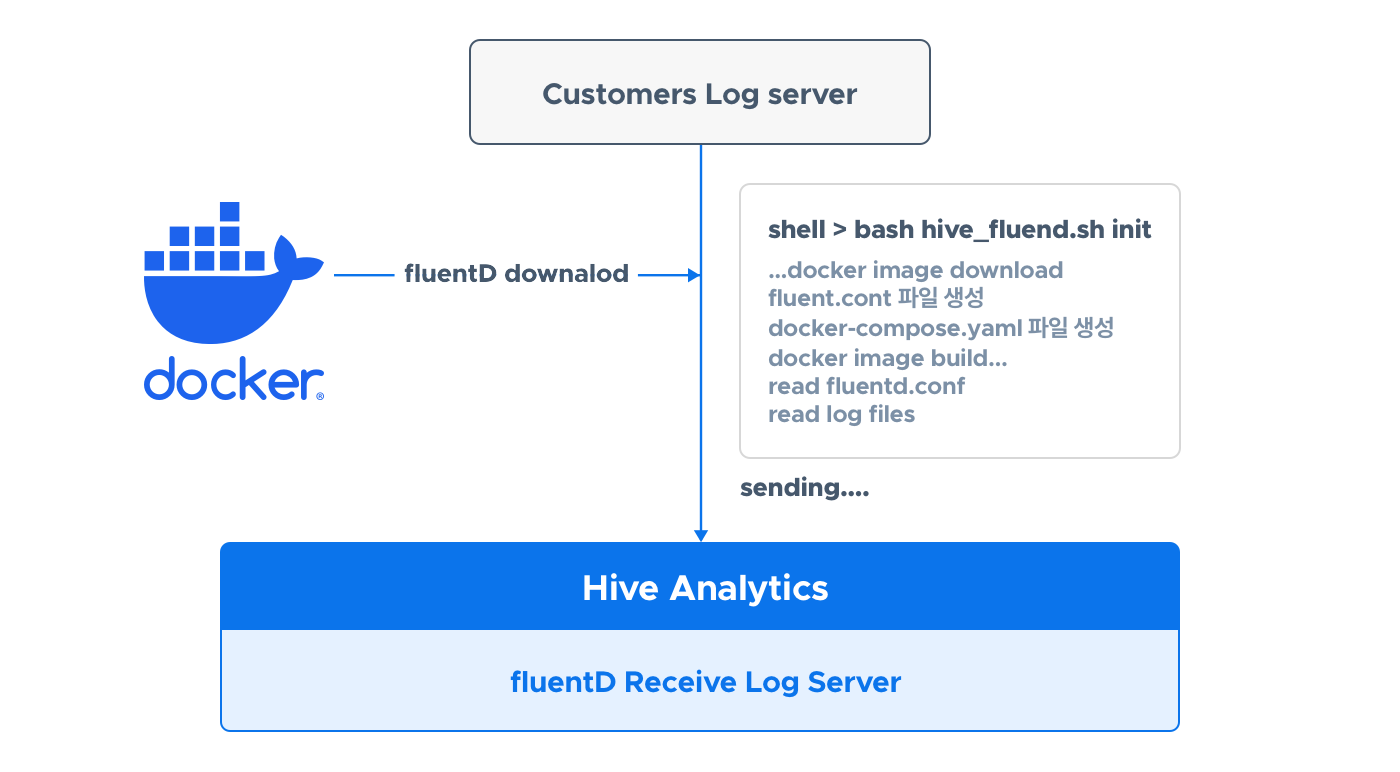

You can use Docker to send logs to the analytics server without installing Fluentd. When you run hive_fluentd.sh, it automatically builds a Docker image and runs it.

Before you begin¶

Prepare an environment where Docker can be used, and download the automation script.

Set up the Docker environment¶

To use Docker, you need to install Docker Engine and docker-compose. For details, see here.

Download the automation script¶

Download and extract the file.

wget https://developers.withhive.com/wp-content/uploads/2024/08/hive_fluentd_docker.tar # Download the file

tar -xvf hive_fluentd_docker.tar # Extract the file

After extracting the archive, you will find the configuration file hive.conf and the script file hive_fluentd.sh in the hive_fluentd_docker folder.

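Configure hive.conf¶

This section covers the configuration for using a language-specific library (Java, Python, etc.) and the configuration for sending specific log files.

Configuration for using a language-specific library¶

Configure only the build environment (sandbox or live). See the example below.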

### Hive.conf ###

# Only use either sandbox or live for the build environment. logs are sent to the Hive server corresponding to each environment.

# Do not modify any other text

build:sandbox

### Don't delete this line ####

When logs are sent through a library, Docker forwards them to the log server over port 24224.
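Before pointing a library at the container, it can help to confirm that something is accepting connections on port 24224. The following is a minimal Python sketch, not part of the Hive tooling. It assumes the container's port 24224 is published on the host you run it from; the function name `is_port_open` and the default host `127.0.0.1` are illustrative choices.

```python
import socket


def is_port_open(host: str = "127.0.0.1", port: int = 24224, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        # create_connection raises OSError (refused, timed out, unreachable)
        # when nothing accepts the connection.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

Calling `is_port_open()` after hive_fluentd.sh has started the container only shows that the port accepts TCP connections. It does not confirm that logs reach the analytics server.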

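Configuration for sending specific log files¶

Enter the file path (absolute path) and the tag. If there are multiple log folders, you must add both a path and a tag for each one.

The following is an example with a single log folder.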

### hive.conf ###

build:sandbox # Only use either sandbox or live for the build environment. logs are sent to the Hive server corresponding to each environment.

# Path: folder path where the file to be sent is located (absolute path).

# Text lines added to all files in that path after fluentd is run are sent.

path:/home/user1/docker/shell_test/game

# Tag: tag name to be applied to the file, ha2union.game.log category name

tag:ha2union.game.log.test

### Don't delete this line ####

The following is an example with multiple log folders.

### hive.conf ###

build:sandbox # Only use either sandbox or live for the build environment. logs are sent to the Hive server corresponding to each environment.

# Path: folder path where the file to be sent is located (absolute path).

# Text lines added to all files in that path after fluentd is run are sent.

path:/home/user1/docker/shell_test/game

# Tag: tag name to be applied to the file, ha2union.game.log category name

tag:ha2union.game.log.test

# To add multiple file paths, follow the method below.

# Path and tag must be added together; otherwise, an error will occur if either is empty.

path:/home/user1/docker/shell_test/game2

tag:ha2union.game.log.test2

path:/home/user1/docker/shell_test/game3

tag:ha2union.game.log.test3

### Don't delete this line ####

Do not modify the text at the top and bottom of the configuration file.
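The rules stated in the hive.conf comments (build is either sandbox or live, paths are absolute, every path has a tag) can be checked before running the script. The sketch below is one way to do that in Python. It is an assumption about how the file can be read, not the parser that hive_fluentd.sh actually uses, and the function name `parse_hive_conf` is hypothetical. It treats everything after `#` as a comment and pairs paths with tags in order of appearance.

```python
from typing import List, Tuple


def parse_hive_conf(text: str) -> Tuple[str, List[Tuple[str, str]]]:
    """Parse hive.conf text into (build, [(path, tag), ...]).

    Raises ValueError for the problems the hive.conf comments warn about.
    """
    build = None
    paths: List[str] = []
    tags: List[str] = []
    for raw in text.splitlines():
        # Drop trailing comments and surrounding whitespace.
        line = raw.split("#", 1)[0].strip()
        if not line or ":" not in line:
            continue
        key, value = (part.strip() for part in line.split(":", 1))
        if key == "build":
            build = value
        elif key == "path":
            paths.append(value)
        elif key == "tag":
            tags.append(value)

    if build not in ("sandbox", "live"):
        raise ValueError(f"build must be sandbox or live, got {build!r}")
    # Paths and tags are paired by order, so the counts must match.
    if len(paths) != len(tags) or "" in paths or "" in tags:
        raise ValueError("every path needs a matching, non-empty tag")
    for path in paths:
        if not path.startswith("/"):
            raise ValueError(f"path must be absolute: {path!r}")
    return build, list(zip(paths, tags))
```

For the multi-folder example above, this returns `"sandbox"` together with three `(path, tag)` pairs. A file with a path but no tag raises `ValueError`.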

build¶

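This is the type of analytics server that logs are sent to. It can be sandbox or live, meaning the Analytics sandbox server or the Analytics live server.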

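path¶

This is the path (absolute path) of the folder that contains the log files to send. Log files must be in JSON format. Once hive_fluentd.sh has run successfully, lines added from that point on to any file under this path are sent to the server. This means that even if log files already exist in the folder, only the lines added after Docker starts running are read and sent. The read position is then remembered, so even if Docker restarts, sending resumes from the line after the last one transmitted.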
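Because only newly appended lines are picked up, an application should append each log entry as its own line rather than rewrite the file. The following Python sketch shows that pattern, assuming one JSON object per line. The function name `append_json_log` and the record fields used with it are illustrative; the fields that Hive Analytics actually requires are not listed here.

```python
import json
from pathlib import Path


def append_json_log(log_file: Path, record: dict) -> None:
    """Append one record to log_file as a single-line JSON object."""
    log_file.parent.mkdir(parents=True, exist_ok=True)
    # Append mode leaves earlier lines untouched, so a reader that remembers
    # its last position only ever sees the new line.
    with log_file.open("a", encoding="utf-8") as fh:
        fh.write(json.dumps(record, ensure_ascii=False) + "\n")
```

For example, `append_json_log(Path("/home/user1/docker/shell_test/game/sample.log"), {"category": "login"})` adds one line to a file under the path from the example configuration. The file name `sample.log` and the `category` field are placeholders.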

tag¶

Enter the tag to apply to the logs in path. The tag must be created in the format ha2union.game.name_to_be_created.
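A tag can be checked against this format before it goes into hive.conf. The sketch below is a hedged Python example. The prefix comes from the format stated above, while the extra rules (no whitespace, no empty dot-separated part) are assumptions, and `is_valid_tag` is a hypothetical helper name.

```python
TAG_PREFIX = "ha2union.game."


def is_valid_tag(tag: str) -> bool:
    """Return True if tag looks like ha2union.game.<name> with a non-empty name."""
    if not tag.startswith(TAG_PREFIX):
        return False
    name = tag[len(TAG_PREFIX):]
    if not name:
        return False
    # Assumption: each dot-separated part is non-empty and has no whitespace.
    return all(
        part and not any(ch.isspace() for ch in part)
        for part in name.split(".")
    )
```

With these rules, `ha2union.game.log.test` from the example configuration is accepted, while `ha2union.game.` and `game.log.test` are rejected.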

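Run and check the automation script¶

After completing the configuration, run hive_fluentd.sh.

The automation script performs every step, from building the Docker image to running Docker and sending logs. If an execution error occurs, check again that the configuration is set correctly. If the following messages appear, the run succeeded. Ignore any messages related to pull access denied.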

[+] Running 1/1

! fluentd Warning pull access denied for com2usplatform/hive_analytics_fluentd_docker, repository does not exist or may require 'docker login': deni... 2.8s

[+] Building 2.1s (8/8) FINISHED docker:default

=> [fluentd internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 145B 0.0s

=> [fluentd internal] load metadata for docker.io/fluent/fluentd:v1.11.4-debian-1.0 1.9s

=> [fluentd auth] fluent/fluentd:pull token for registry-1.docker.io 0.0s

=> [fluentd internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [fluentd 1/2] FROM docker.io/fluent/fluentd:v1.11.4-debian-1.0@sha256:b70acf966c6117751411bb638bdaa5365cb756ad13888cc2ccc0ba479f13aee7 0.0s

=> CACHED [fluentd 2/2] RUN ["gem", "install", "fluent-plugin-forest"] 0.0s

=> [fluentd] exporting to image 0.0s

=> => exporting layers 0.0s

=> => writing image sha256:857dc72492380aeb31bbee36b701f13ae5ae1a933b46945662657246b28964a5 0.0s

=> => naming to docker.io/com2usplatform/hive_analytics_fluentd_docker:sandbox 0.0s

=> [fluentd] resolving provenance for metadata file 0.0s

[+] Running 2/2

✔ Network shell_test_default Created 0.1s

✔ Container hive_analytics_fluentd_docker_sandbox Started 0.5s

Once execution completes successfully, log sending starts.

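Notes¶

Here you can find the commands, how to check logs, the file structure, and how to check the forest logs.

Commands¶

The following is a collection of commands.

Restart¶

Regenerates the fluentd.conf and docker-compose files based on the hive.conf file and restarts the container. Even after a restart, if the previously created folders and pos files exist, sending resumes from the point after the logs that were already sent.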

Pause¶

Pauses the running Docker container.

Resume a paused container¶

Resumes the paused Docker container.

Delete the Docker container¶

Deletes the container.

How to check logs¶

Use the following command to check the logs.

An example of the logs when the command runs normally is as follows:

hive_analytics_fluentd_docker_sandbox | 2024-08-19 07:19:31 +0000 [info]: parsing config file is succeeded path="/fluentd/etc/fluent.conf"

hive_analytics_fluentd_docker_sandbox | 2024-08-19 07:19:31 +0000 [info]: gem 'fluent-plugin-forest' version '0.3.3'

hive_analytics_fluentd_docker_sandbox | 2024-08-19 07:19:31 +0000 [info]: gem 'fluentd' version '1.11.4'

hive_analytics_fluentd_docker_sandbox | 2024-08-19 07:19:31 +0000 [info]: adding forwarding server 'sandbox-analytics-hivelog' host="sandbox-analytics-hivelog.withhive.com" port=24224 weight=60 plugin_id="object:3fcfa2188060"

hive_analytics_fluentd_docker_sandbox | 2024-08-19 07:19:31 +0000 [info]: using configuration file:

hive_analytics_fluentd_docker_sandbox |

hive_analytics_fluentd_docker_sandbox | log_level info

hive_analytics_fluentd_docker_sandbox |

hive_analytics_fluentd_docker_sandbox |

hive_analytics_fluentd_docker_sandbox | @type forward

hive_analytics_fluentd_docker_sandbox | skip_invalid_event true

hive_analytics_fluentd_docker_sandbox | chunk_size_limit 10m

hive_analytics_fluentd_docker_sandbox | chunk_size_warn_limit 9m

hive_analytics_fluentd_docker_sandbox | port 24224

hive_analytics_fluentd_docker_sandbox | bind "0.0.0.0"

hive_analytics_fluentd_docker_sandbox | source_address_key "fluentd_sender_ip"

hive_analytics_fluentd_docker_sandbox |

hive_analytics_fluentd_docker_sandbox |

hive_analytics_fluentd_docker_sandbox | @type copy

hive_analytics_fluentd_docker_sandbox |

hive_analytics_fluentd_docker_sandbox | @type "forest"

hive_analytics_fluentd_docker_sandbox | subtype "file"

hive_analytics_fluentd_docker_sandbox |

hive_analytics_fluentd_docker_sandbox | time_slice_format %Y%m%d%H

hive_analytics_fluentd_docker_sandbox | time_slice_wait 10s

hive_analytics_fluentd_docker_sandbox | path /fluentd/forest/${tag}/${tag}

hive_analytics_fluentd_docker_sandbox | compress gz

hive_analytics_fluentd_docker_sandbox | format json

hive_analytics_fluentd_docker_sandbox |

hive_analytics_fluentd_docker_sandbox |

hive_analytics_fluentd_docker_sandbox |

hive_analytics_fluentd_docker_sandbox | @type "forward"

hive_analytics_fluentd_docker_sandbox |

hive_analytics_fluentd_docker_sandbox | @type "file"

hive_analytics_fluentd_docker_sandbox | path "/fluentd/buffer/foward_buffer/"

hive_analytics_fluentd_docker_sandbox | chunk_limit_size 10m

hive_analytics_fluentd_docker_sandbox | flush_interval 3s

hive_analytics_fluentd_docker_sandbox | total_limit_size 16m

hive_analytics_fluentd_docker_sandbox | flush_thread_count 16

hive_analytics_fluentd_docker_sandbox | queued_chunks_limit_size 16

hive_analytics_fluentd_docker_sandbox |

hive_analytics_fluentd_docker_sandbox |

hive_analytics_fluentd_docker_sandbox | name "sandbox-analytics-hivelog"

hive_analytics_fluentd_docker_sandbox | host "sandbox-analytics-hivelog.withhive.com"

hive_analytics_fluentd_docker_sandbox | port 24224

hive_analytics_fluentd_docker_sandbox |

hive_analytics_fluentd_docker_sandbox |

hive_analytics_fluentd_docker_sandbox | @type "secondary_file"

hive_analytics_fluentd_docker_sandbox | directory "/fluentd/failed/log/forward-failed/send-failed-file"

hive_analytics_fluentd_docker_sandbox | basename "dump.${tag}.${chunk_id}"

hive_analytics_fluentd_docker_sandbox |

hive_analytics_fluentd_docker_sandbox |

hive_analytics_fluentd_docker_sandbox |

hive_analytics_fluentd_docker_sandbox |

hive_analytics_fluentd_docker_sandbox | 2024-08-19 07:19:31 +0000 [info]: starting fluentd-1.11.4 pid=7 ruby="2.6.6"

hive_analytics_fluentd_docker_sandbox | 2024-08-19 07:19:31 +0000 [info]: spawn command to main: cmdline=["/usr/local/bin/ruby", "-Eascii-8bit:ascii-8bit", "/usr/local/bundle/bin/fluentd", "-c", "/fluentd/etc/fluent.conf", "-p", "/fluentd/plugins", "--under-supervisor"]

hive_analytics_fluentd_docker_sandbox | 2024-08-19 07:19:31 +0000 [info]: adding match pattern="ha2union.**" type="copy"

hive_analytics_fluentd_docker_sandbox | 2024-08-19 07:19:31 +0000 [info]: #0 adding forwarding server 'sandbox-analytics-hivelog' host="sandbox-analytics-hivelog.withhive.com" port=24224 weight=60 plugin_id="object:3f8185f73f64"

hive_analytics_fluentd_docker_sandbox | 2024-08-19 07:19:31 +0000 [info]: adding source type="forward"

hive_analytics_fluentd_docker_sandbox | 2024-08-19 07:19:31 +0000 [info]: #0 starting fluentd worker pid=17 ppid=7 worker=0

hive_analytics_fluentd_docker_sandbox | 2024-08-19 07:19:31 +0000 [info]: #0 listening port port=24224 bind="0.0.0.0"

hive_analytics_fluentd_docker_sandbox | 2024-08-19 07:19:31 +0000 [info]: #0 fluentd worker is now running worker=0

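File structure¶

When the script runs for the first time, it automatically creates the required configuration files and folders, as shown below. Unless you delete these configuration files and folders yourself, they are kept even if you stop or delete the Docker container.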

---- hive_fluentd_docker # The folder created when the downloaded file is decompressed

├-- hive.conf # Environment configuration file

├-- hive_fluentd.sh # Automation script

├-- docker-compose.yaml # Configuration file for docker image creation and volume mount

├-- buffer

│ ├-- foward_buffer # Folder where buffer files are temporarily stored

│ │ ├-- {tag1} # Temporarily stores files to be sent in folders created based on tag names as buffer files

│ │ ├-- {tag2}.....

│ ├-- pos # Folder where files that remember the location of read files are stored (data loss or duplicate transmission may occur if this folder is deleted, tampered with, etc.)

│ │ ├-- {tag1} # Stores pos files in folders created based on tag names.

│ │ ├-- {tag2}.....

├-- conf

│ └-- fluentd.conf # Fluentd configuration file

├-- failed # Stores logs that failed to be sent as files

└-- forest # Stores and compresses the successfully sent files on an hourly basis.

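Check the forest logs¶

When log files are sent, a folder named after the tag is created in the forest folder. Logs accumulate in a temporary file for a while and are eventually saved as a gzip file. You can tell whether logs are being sent correctly by checking that files are created in the folder and that their size is increasing.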

drwxr-xr-x 2 root root 4096 Aug 23 13:00 ha2union.game.sample.login/

-rw-r--r-- 1 root root 4515 Aug 23 13:00 ha2union.game.sample.login.2024082303_0.log.gz

-rw-r--r-- 1 root root 4515 Aug 23 12:00 ha2union.game.sample.login.2024082302_0.log.gz

Use the following command to check the logs in a compressed file.
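As an alternative to a shell command, a compressed forest file can also be inspected from Python. The sketch below assumes each line in the file is one JSON record, which matches the `format json` setting shown in the Fluentd configuration output but has not been verified against real files. The function name `read_forest_records` is hypothetical.

```python
import gzip
import json
from pathlib import Path
from typing import Iterator


def read_forest_records(gz_file: Path) -> Iterator[dict]:
    """Yield each JSON record stored in a gzip-compressed forest file."""
    # Mode "rt" decompresses on the fly and decodes the bytes as text.
    with gzip.open(gz_file, "rt", encoding="utf-8") as fh:
        for line in fh:
            line = line.strip()
            if line:
                yield json.loads(line)
```

Counting the records in one of the files listed above could then look like `sum(1 for _ in read_forest_records(Path("forest/ha2union.game.sample.login/ha2union.game.sample.login.2024082303_0.log.gz")))`, run from the hive_fluentd_docker folder.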